The official launch of iOS 18 is almost here. At Apple’s Glowtime event, in which the new iPhone 16 models were introduced alongside the Apple Watch Series 10 and some new AirPods, the company confirmed that the official release date for iOS 18 would be September 16.

However, if you’re getting excited about the launch of iOS 18 because you want to try out the new Apple Intelligence features, you’re going to have a bit of a wait. Apple has confirmed what was already suspected: the first Apple Intelligence features won’t arrive until the iOS 18.1 update, which Apple says will happen “next month.”

Even when Apple Intelligence does finally arrive on the iPhone, there will initially only be a limited set of AI features, such as generative writing tools, the ability to summarize documents and emails, improved transcription, and smart replies.

Some of the most significant Apple Intelligence features will take longer to arrive, with Apple keen to ensure they’re working correctly before they’re released. There’s no clear timeline for when we’ll get access to further Apple Intelligence features, with Apple’s website saying only that they will arrive “in the months to come”, but there are some clues as to when they might land. Here are six of the best Apple Intelligence features that we’re going to have to wait some time for.

1 Image Playground (likely arriving in December)

The app that allows you to create your own images is likely a few months away from release

Apple

According to Bloomberg’s Mark Gurman, we won’t get Apple’s AI-powered Image Playground app until the release of iOS 18.2. Image Playground is a generative AI tool that lets you create images in three different styles: “Animation,” “Illustration,” and “Sketch.” You can type a description of the image you want to create, choose from a range of themes, places, or costumes, and even create an image based on a person from your Photos library. All the images are created on-device within a dedicated app.

Gurman says the iOS 18.2 update will likely be released in December, following the iOS 18.1 update in October. With this in mind, we may need to wait until the end of the year before we can start using Image Playground to create amusing images of our friends and family.

2 Genmoji (likely arrival December)

You may need to wait until December for custom emoji

Apple

Another image generation feature that’s part of Apple Intelligence is Genmoji. This is a pretty awful name for a feature that could be quite useful. Emoji are intended to help us express emotions within text-based messages, since it’s easy to misread the tone of a message and take it differently from how it was intended.

On the whole, emojis work well for telling when someone is joking or being sarcastic, but unfortunately, not everyone understands the same emojis in the same way. For example, the seemingly harmless “thumbs up” emoji can be seen as a simple “OK” to some and a passive-aggressive attack to others. Being able to create your own emoji may go some way to reducing the confusion.

With the Genmoji feature, you’ll be able to type a description of the emoji that you want and see a preview of how it looks. You can keep tweaking until you have the perfect fit, and just like with Image Playground, you can even create Genmoji based on people from your Photos library.

Sadly, it looks like we’ll have to endure the standard set of emoji for the time being. According to Gurman, Genmoji is another feature that will not appear until iOS 18.2, which means it will probably arrive in December.

3 ChatGPT integration into Siri (likely arrival December)

Free access to ChatGPT via Siri should be here by the end of the year

Apple/Pocket-lint

Siri has been around for a long time. Initially a standalone app, Siri became a part of iOS in 2011 as a built-in feature of the iPhone 4S. After the initial excitement surrounding the voice-activated assistant’s launch, Siri has sadly stagnated for more than a decade. Other voice assistants have surpassed its abilities, and modern AI chatbots such as ChatGPT and Google Gemini make Siri seem like tech from another era.

Siri is finally getting a much-needed update in iOS 18, however. While the voice-activated assistant’s abilities will get a significant boost, it still won’t have the same capabilities as other AI chatbots. Apple knows that people who have used apps such as Meta AI and Claude expect a similar performance level from Siri. To this end, Apple has struck a deal with OpenAI, the company behind ChatGPT, to add ChatGPT’s abilities to the iPhone.

When you ask Siri a question, the assistant will try to answer it. However, if the question seems beyond Siri’s abilities, it asks if you want ChatGPT to help. If you agree, Siri will turn to ChatGPT to answer your question. Using ChatGPT in iOS 18 will be free, although if you are a paid ChatGPT user, you can access additional features that are part of your subscription.

This collaboration between Siri and ChatGPT promises to make Siri much more powerful and more accessible, but unfortunately, we’ll have to wait a bit longer. Apple CEO Tim Cook confirmed that ChatGPT integration will come to iOS 18 “by the end of the calendar year,” so it seems likely it will arrive as part of iOS 18.2 in December.

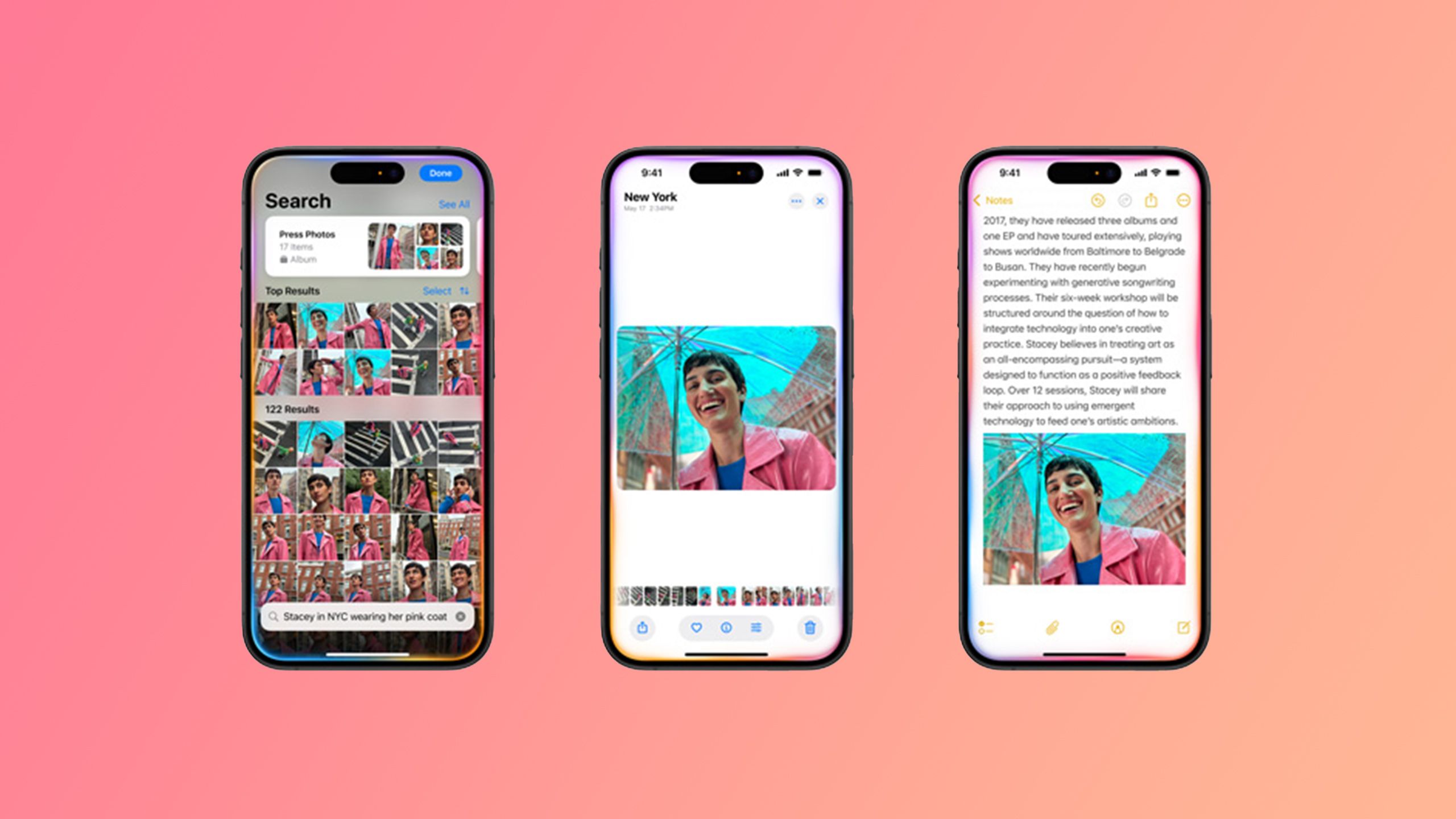

4 A more contextual Siri (likely to arrive in 2025)

An AI-powered Siri with contextual understanding might be delayed until next year

Apple

Siri will be able to turn to ChatGPT for help if needed, but the assistant will also have a lot of new skills of its own. One of those new skills is a greater understanding of personal context, which has the potential to make Siri significantly more useful.

For example, you’ll be able to ask Siri questions such as “what time does Mom’s flight get in?” and Siri will be able to pull up the flight details that your mom sent you via email. Instead of having to open Mail, search for the correct email, then open it and find the section that contains the flight information, all you’ll need to do is ask Siri, and the voice-activated assistant will provide you with the answer.

This is much more like the personal assistant that Siri promised to be when it first launched, but that never really materialized. It remains to be seen how well this feature works in the real world, but it could potentially make Siri a far more useful tool.

There’s some disagreement about when this feature is likely to arrive. According to Gurman, none of the major Siri features will arrive until next year, and this would seem to fall into that category. However, the Wall Street Journal claims that the ability to tap into personal context will arrive before the end of the year.

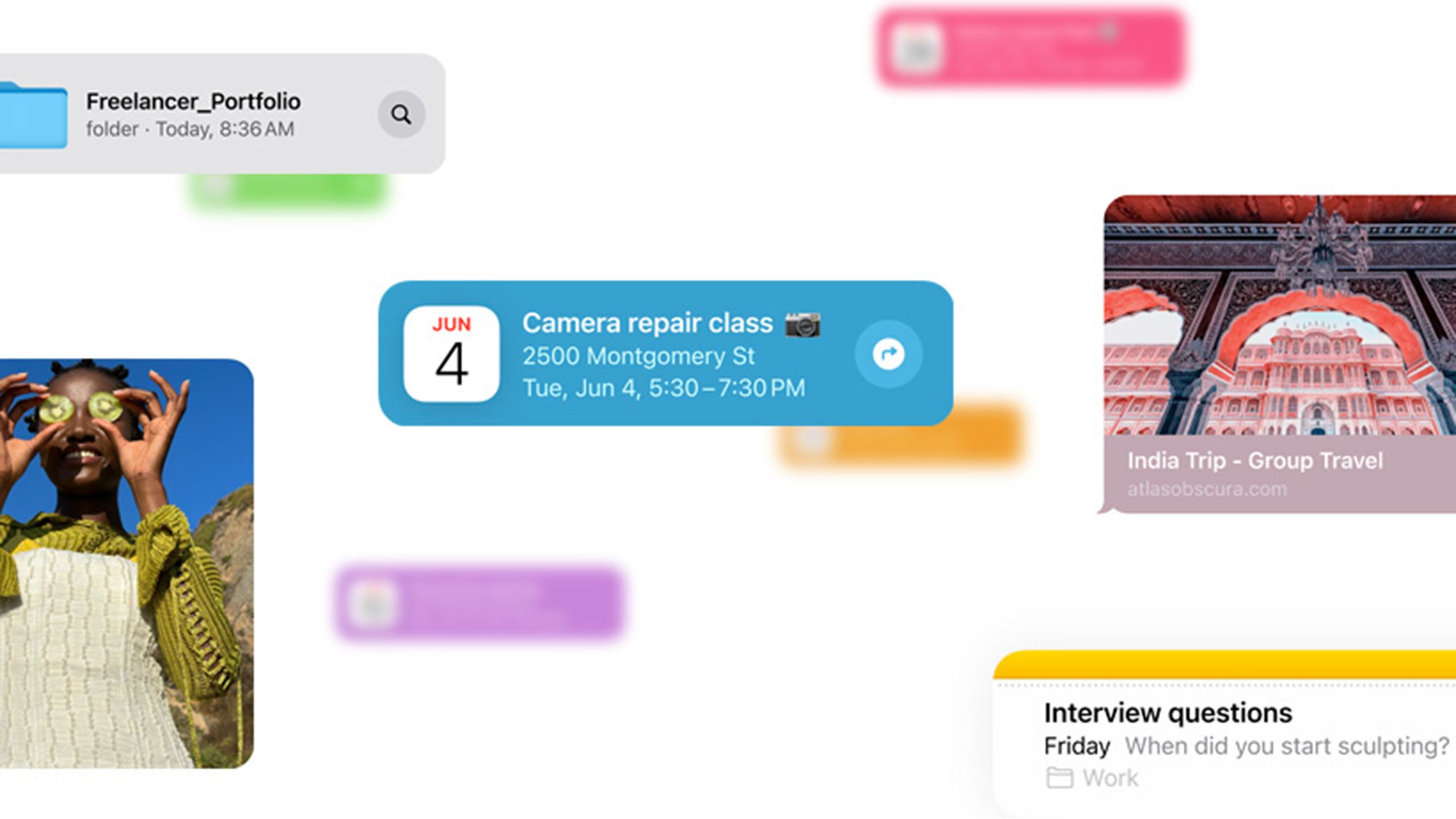

5 On-screen awareness for Siri (likely arrival 2025)

Siri will be able to take information from your iPhone screen

Another very useful feature that’s coming to Siri is on-screen awareness. This will give Siri the ability to see what’s currently displayed on your iPhone screen and extract useful information.

For example, if someone sent you their new address details in Messages, you’d need to copy the information from the message, open the Contacts app, find the relevant contact, open that contact for editing, and add the address in the appropriate field. With on-screen awareness, you only need to ask Siri to add the address to their contact card. Siri will extract the address information displayed on your iPhone screen, understand the context of who the message is from, and add that new address to the appropriate contact.

Most reports agree that this feature is unlikely to appear until sometime next year. How soon we get access to it in 2025 remains to be seen.

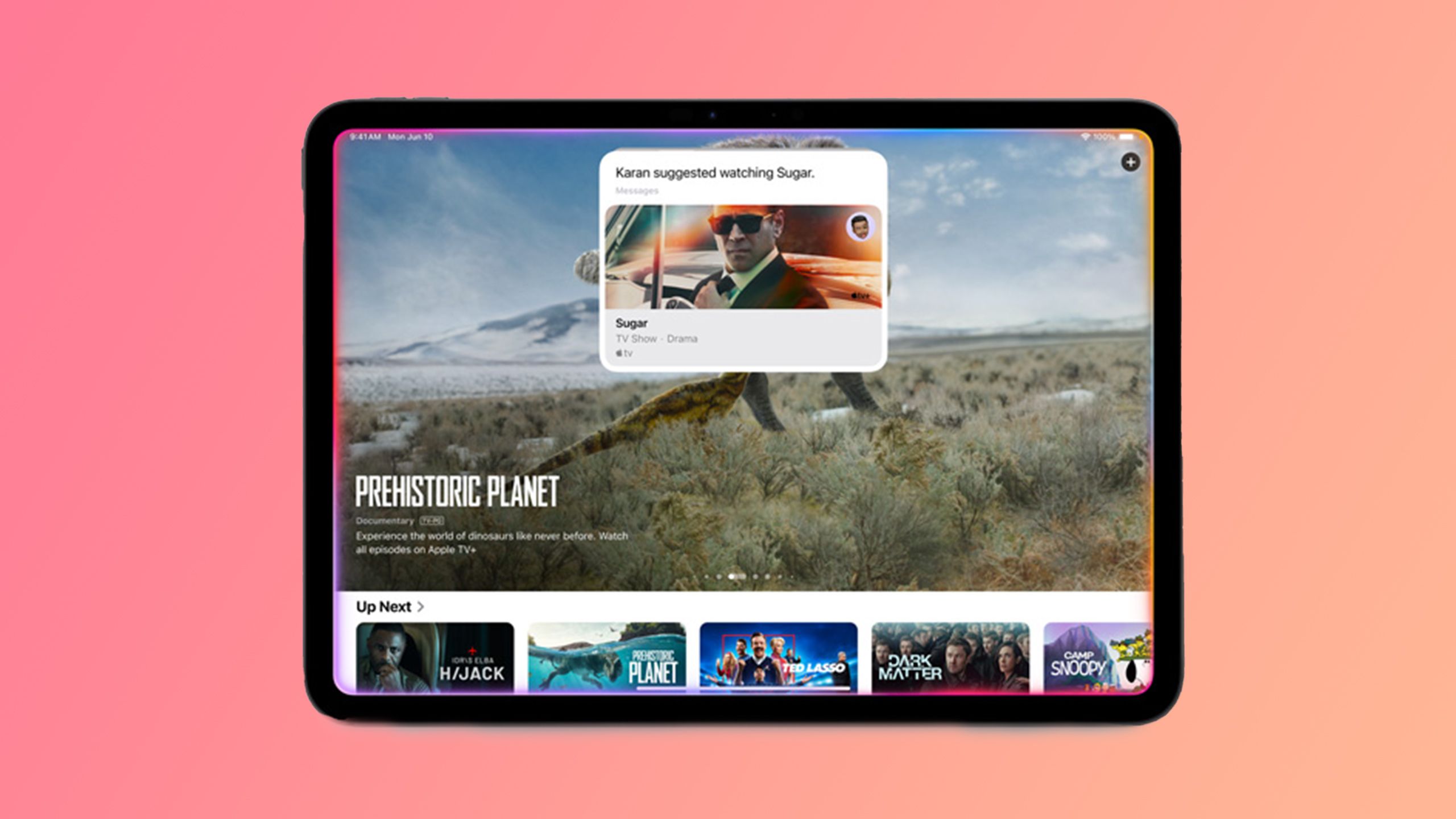

6 A more deeply integrated Siri (likely to come in 2025)

Siri will be able to have more control over apps

Apple/Pocket-lint

Another useful feature coming to Siri in iOS 18 is the ability to take action within a wide range of apps. Currently, you can use Siri in a limited way with apps, such as asking the assistant to open a specific app, but you don’t have much control over the app’s actions.

With iOS 18, Siri will eventually be able not only to have deeper control of apps on your iPhone but also to take action across different apps. For example, if you’ve taken a photo, you could ask Siri to “make this photo pop and then add it to my Vacation Ideas note.” Siri would perform the relevant edit in the Photos app (the request to make the photo “pop” is an example pulled directly from Apple’s own website, indicating that Siri will be able to understand quite broad requests) and then automatically add the photo to your note, without you having to open any apps yourself.

This is another feature that we’ll probably have to wait for, however. It seems likely that deep control across apps via Siri will not be available until next year.
